AI

The idea of AI goes back to ancient Greece, where the notion of the automaton referred to a machine’s ability to act of its own will. In 1950, Alan Turing published “Computing Machinery and Intelligence,” which proposed what became known as the Turing Test, a way to determine whether a machine can think intelligently.

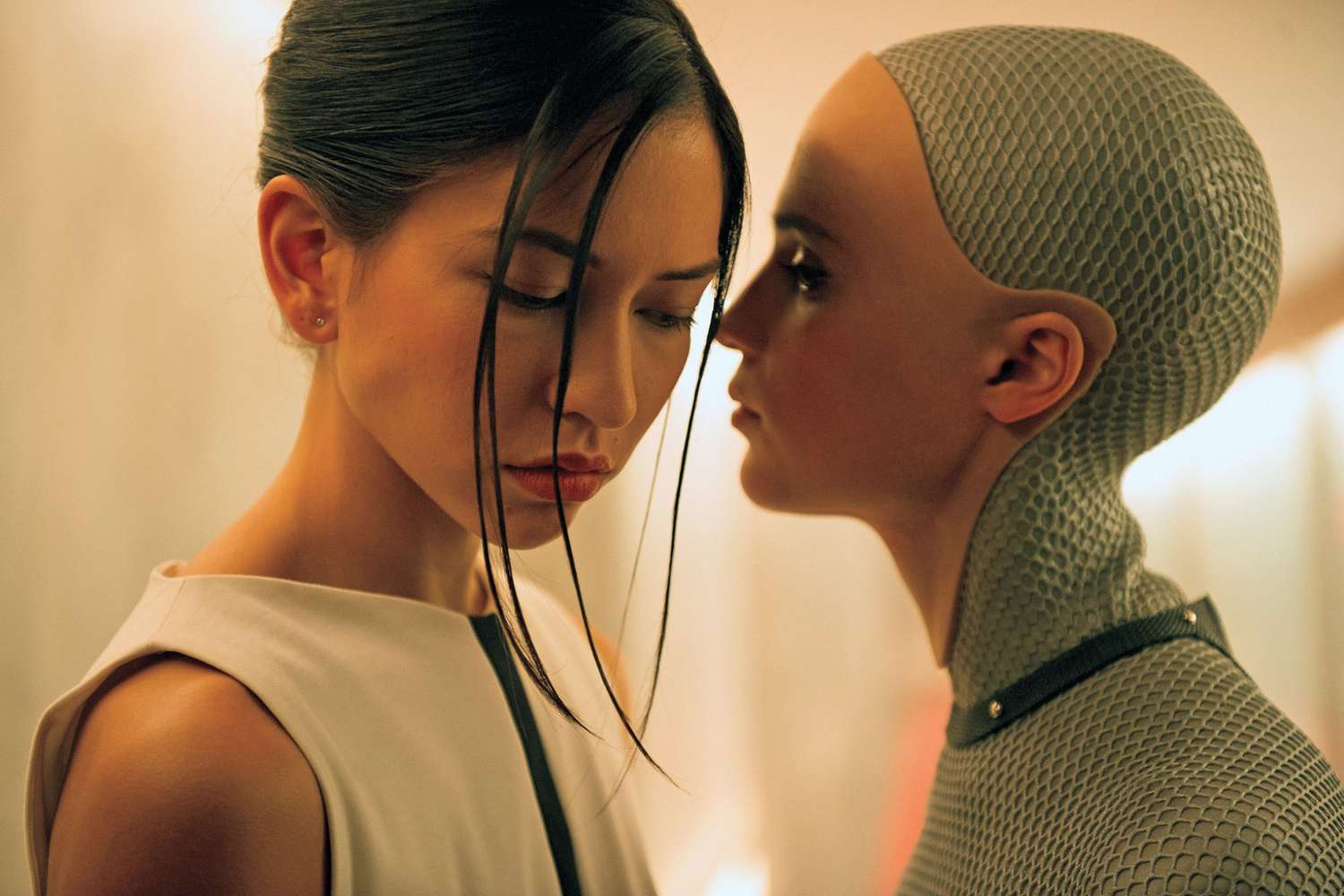

In fiction, AI and humanity are often assumed to be two distinct entities, even though AI, like prosthetic limbs, has become part of what it means to be human. One prevalent trope is AI turning against or replacing humanity. Another involves the mistreatment of artificial people on the grounds that they are purportedly “not real.”

Generative AI tools stake claims to anonymized, collective authorship through machine-generated texts that resemble patterns in the datasets they were trained on. The notion of authorship thus faces new challenges in delineating the agency, knowability, and intentionality of written words.

The scarcity of non-English datasets leads to Anglocentric cultural biases. Further, AI can accelerate digital colonization and labor exploitation. AI developed and controlled by dominant corporate and political entities in the Global North can significantly affect social well-being in the Global South.

AI guardrails are safety mechanisms that provide guidelines and boundaries to ensure that AI applications are developed in line with ethical standards and societal expectations.

Technologists often celebrate technological innovations as revolutions that will improve our lives. AI is indeed transformative, but guardrails need to be in place if its power is to be harnessed to enhance equity and inclusion.