History of Artificial Intelligence

AI may seem to be the holy grail of automation, but the idea of autonomous machines goes back to ancient Greece, where the word automaton described a machine able to act of its own will. Today, educators debate AI’s impact on students’ development of critical skills. In ancient Greece, philosophers debated the demerits of another type of “technology”: writing. In Plato’s Phaedrus, Socrates insists that writing erodes memory and weakens the mind (Walter Ong, Orality and Literacy, 2002). People relied less on their own memories once the written word allowed for the storage and retrieval of information and the organization of thoughts. The invention of the printing press brought new promises and “threats.”

In 1950, Alan Turing published “Computing Machinery and Intelligence,” in which he considered the question “Can machines think?” and several variants of it. The paper became the foundation for the Turing Test, which is meant to determine whether a machine can think intelligently. The test does not scrutinize “humanity”; rather, it is a thought experiment that Turing called the imitation game. If a machine can imitate a human well enough to “fool” a human interrogator into believing that it is human, it has succeeded.

Artificial Intelligence (AI) is an umbrella term covering a set of technologies that operate according to self-governing protocols. Examples include predictive text in autocomplete typing tools, the algorithmic ranking mechanisms commonly found in search engines, and semi-automated route calculation in Google Maps.

There have been decades of development of various forms of AI, ranging from Claude Shannon’s robotic mouse, which could navigate a labyrinth, in 1950 to Deep Blue, a purpose-built IBM supercomputer that defeated world chess champion Garry Kasparov in 1997.

Generative AI

Since late 2022, one of the most prevalent types of AI has been generative AI (GAI), which refers to machine-learning models that generate texts or images resembling the patterns in their training data. Based on users’ textual or image prompts, these models appear capable of simulating human speech and writing, translating texts, crafting images in many styles, and generating computer code. In short, generative AI is a machine-learning model trained to “create new data, rather than making a prediction about a specific dataset” (Adam Zewe, MIT News, 2023).

The research laboratory OpenAI, founded as a non-profit, shifted to a “capped-profit” model in 2019, and in November 2022 it released a free preview of ChatGPT, which can converse with users by simulating human speech. The chatbot was based on GPT-3.5, a family of large language models (LLMs). The acronym GPT stands for Generative Pre-trained Transformer.

Because the bot performs conversational tasks through a user-friendly interface with a very low barrier to entry, ChatGPT has gained widespread media coverage and a large user base. It has also spurred related products, including Google’s rival chatbot Bard (now Gemini) and Microsoft’s Bing Chat (code-named Sydney, now Copilot), which is built on OpenAI’s GPT models. In the months after ChatGPT’s public release, many in the humanities perceived it as a threat to higher education.

AI-generated texts have proliferated across the Internet. Sports Illustrated, for example, has published articles attributed to fictional authors with fictional bios.

On the other side of the equation, AI may be deployed to enhance linguistic parity, productivity, and higher education.

Current technological limitations mean that significant human curatorial and editorial labor, in the form of prompt engineering or “post-production,” is required to ensure high-quality outputs from this type of AI. Students and journalists often overlook these limitations.

ChatGPT’s outputs are polished enough to have triggered polarized responses from multiple communities. Some writers and educators pronounced the death of the college essay in sensationalist tones (Marche), while others declared allegiance to the new AI tool as the latest savior of higher education (O’Shea).

However, “hype leads to hubris, and hubris leads to conceit, and conceit leads to failure,” cautioned Rodney Brooks, professor emeritus at MIT and co-founder of Robust.AI. “No one technology has ever surpassed everything else,” he concluded.

Hype Culture vs. Substantive Issues

The hype around ChatGPT’s performances and simulations is driven less by the merits of the technology than by investors and incentives rooted in market realities, such as stock prices and OpenAI’s subscription plan for premium use (ChatGPT Plus). It casts generative AI in binary terms, as either a devil or an angel. The reality is muddier.

Meanwhile, a controlled study at MIT suggests that ChatGPT helped increase productivity in “mid-level professional writing tasks” such as marketing (Noy and Zhang 11). Within the arts and humanities, conversations about ChatGPT tend to focus on detecting new forms of plagiarism, as a 2023 episode of the Folger Shakespeare Library’s Shakespeare Unlimited podcast illustrates, among other publications.

While this AI technology excels at pattern recognition and reproduction, it has several shortcomings for expository writing in humanistic research. It tends to produce, and confidently reassert, factual errors (Borji). It lacks aggregated domain knowledge and often performs poorly on context-specific tasks (Peng et al.). Its outputs, from humanistic perspectives, can be formulaic, generic, and repetitive. It is incapable of natural language processing in the context of critical thinking (Qin et al. 11). It is incapable of symbolic and inductive reasoning (Qin et al. 2), as well as of moral reasoning.

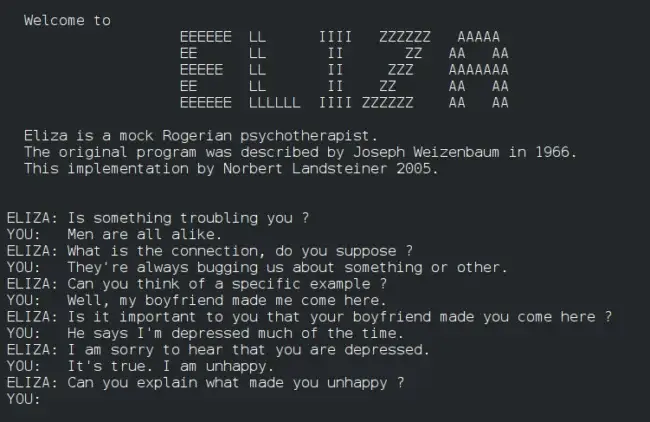

ChatGPT is often touted as unprecedented, but it has many predecessors, even if they were less sophisticated. Among them is a famous MIT program called ELIZA.

ELIZA was a computer program that simulated conversation using pattern matching and substitution. Joseph Weizenbaum developed it at MIT from 1964 to 1967, and it is an early example of a conversational AI program. Here is a screenshot of ELIZA at work during a conversation with a user. When running the DOCTOR script, ELIZA simulated a psychotherapist of the Rogerian school. A Rogerian therapist would repeat the patient’s words back to the patient and reflect on them. ELIZA thus created conversational interactions similar to those in the office of a non-directive “psychotherapist in an initial psychiatric interview.” The purpose of ELIZA was to “demonstrate that the communication between man and machine was superficial.”
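To make “pattern matching and substitution” concrete, here is a minimal Python sketch in the spirit of ELIZA. It is not Weizenbaum’s program or his DOCTOR script: the three rules, the pronoun table, and the names `RULES`, `reflect`, and `respond` are hypothetical illustrations. Each rule pairs a pattern with a reply template; the matched fragment of the user’s sentence is “reflected” (first person swapped for second) and substituted into the template, mimicking the Rogerian habit of handing the patient’s words back.

```python
import re

# Hypothetical rules loosely in the spirit of ELIZA's DOCTOR script.
# Each rule pairs a regular expression with a reply template; "{0}" is
# filled with the captured fragment after its pronouns are reflected.
RULES = [
    (re.compile(r"i need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."  # stock reply when no rule matches

# Swap first- and second-person words so the reply "mirrors" the user.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Lowercase the fragment and swap pronouns word by word."""
    return " ".join(REFLECTIONS.get(w, w) for w in fragment.lower().split())


def respond(sentence: str) -> str:
    """Return the reply from the first rule whose pattern matches the whole sentence."""
    cleaned = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK
```

For instance, `respond("I am unhappy about my job.")` returns “How long have you been unhappy about your job?”, while a sentence that matches no rule falls through to “Please go on.” Nothing in the program represents what a job or unhappiness is, which is the kind of superficiality Weizenbaum set out to demonstrate.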

Limitations of Generative AI

At its core, such generative AI is “a lumbering statistical engine for pattern matching” that extrapolates “the most likely conversational response” to a question without positing “any causal mechanisms” beyond “description and prediction,” as linguists have pointed out (Chomsky, Roberts, and Watumull). Further, these probabilistic models operate as black boxes: systems that produce information without revealing their internal workings. They generally lack interpretability, in that even the people who build them cannot fully explain or predict their outputs.
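A drastically simplified illustration of “extrapolating the most likely” continuation is a bigram model, which counts which word follows which in a text and then always picks the most frequent follower. This is an assumption made for teaching purposes: ChatGPT and similar systems use transformer neural networks over subword tokens, not word-pair tables, and they usually sample rather than always taking the top choice. The function names and the one-sentence corpus are hypothetical. The sketch nonetheless shows the point the linguists make: the model records what tends to come next, with no representation of why.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count how often each word is immediately followed by each other word."""
    words = text.lower().split()
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    # most_common keeps first-encountered order for ties.
    return followers.most_common(1)[0][0]


def continue_text(counts: Dict[str, Counter], start: str, length: int) -> str:
    """Greedily extend `start` one word at a time with the likeliest next word."""
    output = [start.lower()]
    for _ in range(length):
        nxt = most_likely_next(counts, output[-1])
        if nxt is None:  # dead end: this word never had a follower
            break
        output.append(nxt)
    return " ".join(output)


model = train_bigrams("the cat sat on the mat and the cat slept")
print(continue_text(model, "the", 4))
```

Here “cat” follows “the” twice and “mat” only once, so “cat” wins; “sat” and “slept” tie after “cat,” and the tie goes to whichever appeared first. The script therefore prints “the cat sat on the.” The output looks fluent only because the training text was.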

Limitations of Anthropocentrism

Another limitation lies in the anthropocentric assumptions that inform human-machine relations. Anthropocentric thinking regards humankind as the center of existence and overlooks humans’ roles within biological and computational ecosystems. Current benchmarks for evaluating AI models overemphasize educational milestones, such as “PhD-level intelligence.” Since AI models are non-human entities, their efficacy should not be measured against human cognition. These industrial benchmarks are also inadequate because they are uneven and ill-defined.

Does ChatGPT have disabilities?

If one insists on measuring the developmental stages of AI against normative human development, itself an able-bodied concept, one might conclude, for instance, that ChatGPT has disabilities. As a computer program interacting with humans through a textual interface, it cannot see or hear its users. It cannot “overhear” what users say aloud about it. It “perceives” humans’ presence only through textual input. It has no limbs and lacks the five senses. This thought experiment shows the shortcomings of anthropocentric metaphors for the relations between humans and non-human entities.

Coda

The history of artificial intelligence is part of the larger history of human-machine relations and cultural attitudes towards technology. We can learn many lessons from the history of technology. For instance, as Jeff Jarvis argues in The Gutenberg Parenthesis, the era of the printing press holds important lessons for our era of AI in terms of power relations: “The history of printing is a history of power. The story of print is one of control, of attempts to manage the tool and fence in the thoughts it conveyed, to restrict who may speak and what they may say through gatekeepers, markets, edicts, laws, and norms” (8).

We now live in an algorithmically governed, inquiry-driven culture (in plain English: the era of Internet search engines), but there is a long history of rules and automation. An algorithm is simply a set of rules to be followed in problem-solving operations, especially, but not only, by a computer. Human societies have always lived with ancestral, cultural, and legal rules and regulations.

Does AI Understand Language?

According to some linguists, AI cannot yet understand semantic meaning or process natural language as humans do. In the following 30-minute interview, linguist Noam Chomsky, widely regarded as a founding figure of modern linguistics, joins entrepreneur Gary Marcus for a wide-ranging discussion of why the myths surrounding AI are so dangerous, why it is inadvisable to rely on artificial intelligence as a world-saver, and where it all went wrong.

Your Turn

Name and analyze one form of technology of representation that remediates, imitates, or repackages an older form of technology. Technologies of representation are media and the social and technical affordances (tools) that mediate the content and form of communication. They take part in the processes that create cultural meaning; examples include theatre, cinema, the printing press, radio, television, and more.

One example, of course, is AI. Generative AI may seem entirely new, but it reframes older technologies of representation. Within media studies, Jay David Bolter and Richard Grusin’s theory of remediation holds that a defining characteristic of new digital media is remediation, the representation of one medium in another (5 and 24). New media remix and compete with older media. Generative AI represents data from one medium (datasets too vast for humans to digest) in another medium (succinct, human-like conversations). It therefore “remediates” human narratives and performs various versions of the collective consciousness of its publics.

Another example is the set of eighteenth-century Jaquet-Droz automata now in the Musée d’Art et d’Histoire of Neuchâtel, Switzerland. These automata are considered ancestors of modern computers and contemporary automation technologies.

Pierre Jaquet-Droz, his son Henri-Louis, and Jean-Frédéric Leschot built three doll automata (a musician, a writer, and a draughtsman) between 1768 and 1774. They were intended as sensational advertisements to boost sales of watches to aristocrats.

The musician plays a custom-built instrument by pressing the keys with her fingers. The draughtsman can draw four different images: a portrait of Louis XV, a dog, Marie Antoinette and Louis XVI, and Cupid on a chariot.

The writer is, in some ways, a precursor of contemporary generative AI. He can write any custom text up to 40 letters long, as encoded on a wheel. He writes with a quill pen, which he inks from time to time.

Take a look at the following short documentary by the BBC.

Further Reading

Some of these readings are open access; others can only be accessed using George Washington University credentials.

Bolter, Jay David, and Richard Grusin. “Introduction.” In Remediation: Understanding New Media. MIT Press, 2000, pp. 3–15.

Jarvis, Jeff. The Gutenberg Parenthesis: The Age of Print and Its Lessons for the Age of the Internet. Bloomsbury, 2023. Chapter 1.

Oppy, Graham, and David Dowe. “The Turing Test.” The Stanford Encyclopedia of Philosophy (Winter 2021 Edition), edited by Edward N. Zalta.

Turing, Alan. “Computing Machinery and Intelligence.” Mind LIX, no. 236 (October 1950): 433–460.